You paste your prompt into ChatGPT, Copilot, or Claude. You get code back. You copy it into your project. It works... mostly. But here's the uncomfortable truth: you have no idea if that was the best solution.

Every AI model has blind spots. GPT-4 might over-engineer a simple function. Claude might miss an edge case. DeepSeek might write terse code that's hard to maintain. When you rely on a single model, you're gambling that its particular quirks align with your needs.

We built RespCode because we were tired of gambling.

Fast, secure, production-ready code through multi-model orchestration.

The Single-Model Problem

Here's what happens when you use a traditional AI coding assistant:

- You write a prompt

- One model generates one solution

- You accept it (or tweak your prompt and try again)

- You move on, hoping it was good enough

This workflow has a fundamental flaw: you never see the alternatives. What if Claude's solution was cleaner? What if DeepSeek's approach was more performant? What if GPT-4 caught a bug the others missed?

"The best code comes from iteration and comparison, not from accepting the first answer you receive."

Enter Multi-Model Orchestration

RespCode takes a fundamentally different approach. Instead of asking one AI and hoping for the best, we let you orchestrate multiple models and compare their outputs.

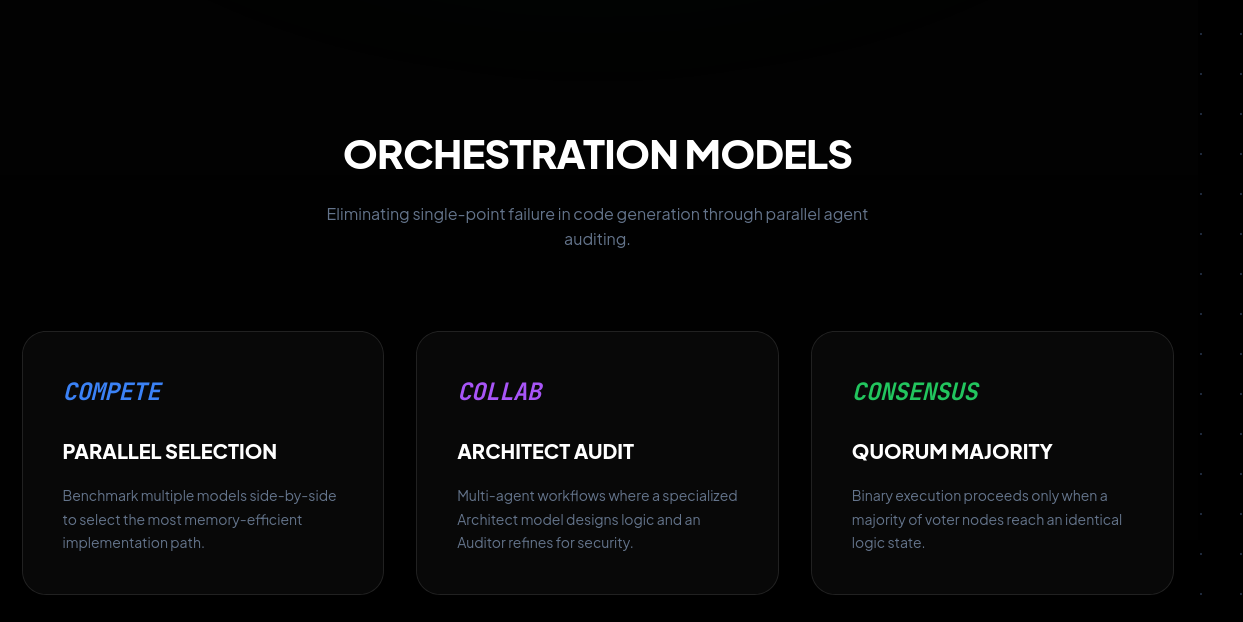

We've built three distinct modes, each solving a different problem:

🏆 Compete Mode: Let the Best Solution Win

Send your prompt to Claude, GPT-4o, DeepSeek, and Gemini simultaneously. In seconds, you see all four solutions side-by-side in a 2×2 grid. Each one runs in a real sandbox, so you can compare not just the code, but the actual output.

This is incredibly powerful for:

- Learning — See how different models approach the same problem

- Quality — Pick the cleanest, most maintainable solution

- Debugging — When one model's code fails, another's might reveal why

- Performance — Compare execution characteristics across implementations
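To make the fan-out concrete, here is a minimal sketch of sending one prompt to several models at once. It is not RespCode's actual implementation: `fake_model`, `compete`, and the `MODELS` table are hypothetical names, and the "models" are local stubs that return canned text after a short delay, standing in for real API clients.

```python
import asyncio
from typing import Dict


async def fake_model(name: str, prompt: str, delay: float) -> str:
    """Hypothetical stand-in for a real model client; returns canned text."""
    await asyncio.sleep(delay)  # simulates network latency
    return f"# {name} solution for: {prompt}"


async def compete(prompt: str, models: Dict[str, float]) -> Dict[str, str]:
    """Send one prompt to every model concurrently; collect results by name."""
    names = list(models)
    tasks = [fake_model(name, prompt, models[name]) for name in names]
    # With return_exceptions=True, a failure in one model comes back as a
    # value in the result list instead of being raised out of gather().
    results = await asyncio.gather(*tasks, return_exceptions=True)
    return {
        name: (f"error: {res}" if isinstance(res, Exception) else res)
        for name, res in zip(names, results)
    }


# Assumed model labels and simulated latencies (seconds), for illustration only.
MODELS = {"claude": 0.03, "gpt-4o": 0.02, "deepseek": 0.01, "gemini": 0.02}

outputs = asyncio.run(compete("reverse a linked list", MODELS))
for name, text in outputs.items():
    print(name, "->", text)
```

Because the calls run concurrently, total wait time is roughly that of the slowest model rather than the sum of all four, which is what makes a side-by-side view practical.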

🔄 Collaborate Mode: Iterative Refinement

Sometimes the best solution comes from iteration. Collaborate Mode chains models together in a refinement pipeline: DeepSeek drafts → Claude refines → GPT-4o polishes.

Each model sees the previous model's output and improves upon it. You see every stage, so you understand exactly how the code evolved.
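The chaining idea can be sketched in a few lines. This is an illustration under stated assumptions, not RespCode's pipeline: `draft`, `refine`, and `polish` are hypothetical stubs that apply fixed string edits where a real system would call a model, and `collaborate` is an invented name.

```python
from typing import Callable, List, Tuple

# A stage is (label, fn(prompt, previous_output) -> new_output).
Stage = Tuple[str, Callable[[str, str], str]]


def draft(prompt: str, previous: str) -> str:
    # Stub: a real drafting model would generate this from the prompt.
    return "def add(a,b): return a+b"


def refine(prompt: str, previous: str) -> str:
    # Stub: adds parameter type hints to the draft.
    return previous.replace("a,b", "a: int, b: int")


def polish(prompt: str, previous: str) -> str:
    # Stub: adds a return annotation to the refined code.
    return previous.replace("): return", ") -> int: return")


def collaborate(prompt: str, stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order, feeding each one the previous stage's output.

    Returns every intermediate result so a reader can inspect each step.
    """
    history = []
    current = ""
    for label, stage in stages:
        current = stage(prompt, current)
        history.append((label, current))
    return history


pipeline = [("draft", draft), ("refine", refine), ("polish", polish)]
for label, code in collaborate("write an add function", pipeline):
    print(f"{label}: {code}")
```

Keeping the full history, rather than only the final string, is what lets a reader see how the code changed at each stage.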

⚡ Consensus Mode: Democratic Code Generation

For critical code, why trust just one opinion? Consensus Mode runs all models in parallel, then uses Claude to analyze all outputs and synthesize the best parts into a merged solution.

Think of it as an AI-powered code review: four senior developers each write their own solution, then a reviewer reads all four and merges the strongest parts into a single version.
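One way to picture the synthesis step: gather every candidate, then hand all of them to a reviewer in a single prompt. The sketch below assumes that shape and nothing more. `run_all`, `build_synthesis_prompt`, `consensus`, and the stub models are hypothetical, and the wording of the reviewer prompt is invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Tuple

Model = Callable[[str], str]


def run_all(prompt: str, models: Dict[str, Model]) -> Dict[str, str]:
    """Call every model in parallel threads and collect outputs by name."""
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: fut.result() for name, fut in futures.items()}


def build_synthesis_prompt(prompt: str, candidates: Dict[str, str]) -> str:
    """Pack the task and all candidate solutions into one reviewer prompt."""
    parts = [f"Task: {prompt}", "Candidate solutions:"]
    for name, code in candidates.items():
        parts.append(f"--- {name} ---\n{code}")
    parts.append("Merge the strongest parts into one solution.")
    return "\n\n".join(parts)


def consensus(
    prompt: str, models: Dict[str, Model], synthesizer: Model
) -> Tuple[Dict[str, str], str]:
    """Return both the raw candidates and the merged result."""
    candidates = run_all(prompt, models)
    merged = synthesizer(build_synthesis_prompt(prompt, candidates))
    return candidates, merged


# Stubs standing in for real model clients (assumptions, not real APIs).
STUB_MODELS = {
    "model_a": lambda p: "def f(x): return x * 2",
    "model_b": lambda p: "def f(x): return x + x",
    "model_c": lambda p: "def f(x): return 2 * x",
    "model_d": lambda p: "f = lambda x: x << 1",
}


def fake_synthesizer(text: str) -> str:
    # Stub reviewer: reports how many candidate sections it was given.
    count = sum(line.startswith("--- ") for line in text.splitlines())
    return f"merged {count} candidates"


candidates, merged = consensus("double a number", STUB_MODELS, fake_synthesizer)
print(merged)
```

Returning the candidates alongside the merged answer keeps the individual solutions available for inspection instead of hiding them behind the synthesis.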

Real Sandboxes, Real Results

Code generation is only half the battle. You need to know if the code actually works.

Every piece of code generated in RespCode runs in a real sandbox environment. Not simulated. Not mocked. Real compilation, real execution, real output.

- x86_64: Native speed via Daytona sandboxes

- ARM64: Native ARM via Firecracker microVMs

- RISC-V: Emerging architecture via QEMU

- ARM32: Embedded systems development

This means you can generate code, run it, see the output, and iterate—all without leaving the IDE. When Compete Mode shows you four solutions, you're not just comparing code syntax. You're comparing actual execution results.
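Comparing execution results rather than source text can be illustrated with a small local experiment. The sketch below runs each candidate snippet in a separate Python process and records what actually happened. It is only an analogy: a plain subprocess on your own machine provides no isolation and is not a sandbox, and none of this reflects how Daytona, Firecracker, or QEMU are integrated. `run_python` and `RunResult` are hypothetical names.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class RunResult:
    exit_code: int
    stdout: str
    stderr: str
    timed_out: bool = False


def run_python(code: str, timeout: float = 5.0) -> RunResult:
    """Execute a snippet in a child interpreter and capture its output.

    NOTE: this is NOT a security boundary; only run code you trust.
    """
    try:
        proc = subprocess.run(
            # -I starts the child in isolated mode (ignores user site
            # packages and PYTHON* environment variables).
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return RunResult(-1, "", "timed out", timed_out=True)
    return RunResult(proc.returncode, proc.stdout, proc.stderr)


# Two hypothetical candidate solutions: one works, one crashes at runtime.
CANDIDATES = {
    "candidate_a": "print(sum(range(5)))",
    "candidate_b": "print(1 / 0)",
}

for name, code in CANDIDATES.items():
    result = run_python(code)
    status = "ok" if result.exit_code == 0 else "failed"
    print(f"{name}: {status}, stdout={result.stdout.strip()!r}")
```

Both snippets look plausible on the page, but only running them shows that the second one raises `ZeroDivisionError`, which is the kind of difference a side-by-side execution view surfaces.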

Human in the Loop: Why It Matters

There's a growing trend in AI tools toward full automation. "Let the AI handle it." "Just trust the output." "AI knows best."

We think this is dangerous.

AI models are powerful but imperfect. They hallucinate. They have biases. They make mistakes that a human would catch in seconds. The solution isn't to remove humans from the loop—it's to give humans better tools for supervision.

RespCode is built on the principle of transparent AI:

- You see all the options, not just one

- You see the execution results, not just the code

- You see each stage of refinement, not just the final output

- You make the final decision, not the AI

When you use RespCode, you're not just accepting AI output. You're supervising, comparing, and deciding. That's how AI development tools should work.

Who Is RespCode For?

We built RespCode for developers who:

- Want quality over speed — You'd rather spend 30 seconds comparing solutions than 30 minutes debugging a bad one

- Work on production systems — Where "good enough" isn't good enough

- Are learning — Seeing how different models approach problems is incredibly educational

- Don't fully trust AI — And want tools that embrace healthy skepticism

- Build for multiple platforms — x86, ARM, RISC-V, embedded systems

Try It Yourself

The best way to understand RespCode is to try it. We offer 100 free credits to get started, with no credit card required.

Send the same prompt to four models. Compare the results. Run them in real sandboxes. See for yourself why multi-model orchestration produces better code.

The Future of AI-Assisted Development

We believe the future isn't about replacing developers with AI. It's about giving developers superpowers through AI—while keeping them firmly in control.

Single-model tools are a stepping stone. Multi-model orchestration is the destination.

Welcome to RespCode. Let's build better software together.